While starting this post I realized I have been maintaining personal infrastructure for over a decade!

Most of the things I’ve self-hosted have been for personal use: an email server, a blog, an IRC server, image hosting, an RSS reader and so on. All of it has been a bit all over the place and never properly streamlined. Some things have been in containers, some have just been flat files with an nginx service in front, and some have been a randomly installed Debian package from somewhere I have since forgotten.

When I decided I should give up streaming services early last year, I realized I should rethink the approach a bit and try to streamline how I wanted to host different things.

The goal is to have personal infrastructure and services hosted locally at home. Most things should be easy to set up, but fundamentally I’m willing to trade a bit of convenience for the sake of self-hosting. I also have a shitty ISP at home that NATs everything, so I need to expose things to the internet through a wireguard tunnel, and preferably host things in a way where I don’t have to think about it.

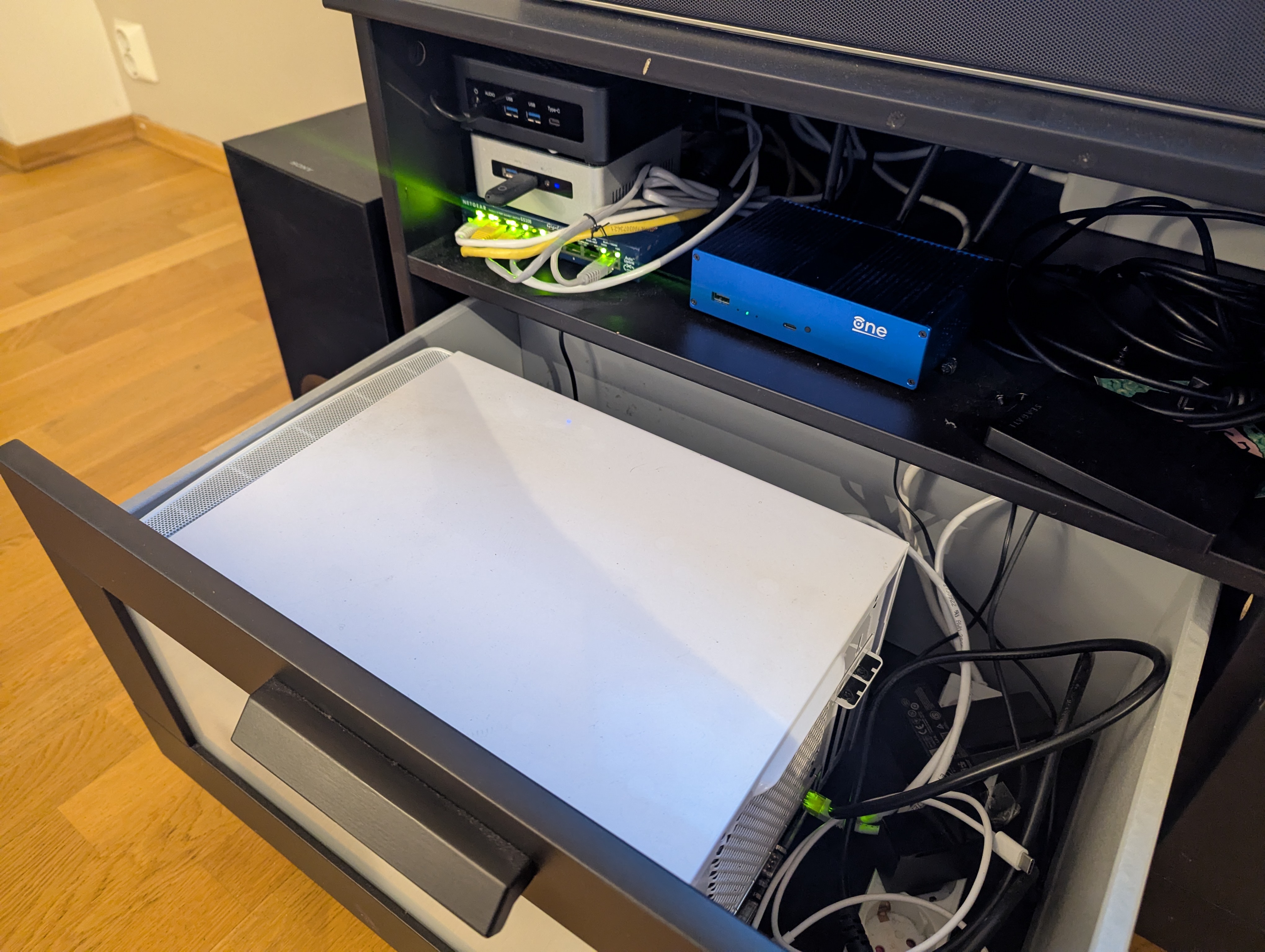

The hardware I’m running this infra on ranges from ancient to fairly modern.

- A NAS build with 2x8 TB disks and all hardware from what I think is 2012?

- Intel NUC from 2015. Intel i5-6260U and 16 GB RAM

- AMD NUC with AMD Ryzen 7 8745HS and 64 GB RAM from AliExpress

- OpenWRT One

There are a couple of things I will not go into a lot of detail on. For my NAS setup I’m planning on writing up a separate post about my picture setup. It was mainly created to support my photo editing and storage, and its use in my infrastructure was not really intended.

For my network setup I don’t really have anything special. It’s all flat.

I also wrote previously about my DNS setup, and I’m happy to report it has been working out great. It also serves my home domain, .home.arpa, from the same server, allow-listed to just my internal IPs. It also has an ACME CA set up, which I intend to write about at another point.

For more DNS: Self-hosting DNS for no fun, but a little profit!

Incus

I have been using incus (a fork of lxd) for several years at this point. It has been my go-to for locally mocking and testing infrastructure, but I had never really used it to host personal services. With the introduction of OCI container support all this has become easier!

I can pretty much host all my services either as a normal lxc container, a QEMU VM or an OCI container pulled from a container registry.

I’ve been running incus in various configurations for years at this point. It’s super reliable and I’ve never had to be concerned about updates between versions of things suddenly breaking. This has been super important for me to just have infrastructure I can rely on without having to think very hard about it.

The cluster encompasses the AMD NUC (called amd) and the Intel NUC (called byggmester).

λ ~ » incus cluster list -c nuas
+------------+---------------------------+--------------+--------+
| NAME       | URL                       | ARCHITECTURE | STATUS |
+------------+---------------------------+--------------+--------+
| amd        | https://10.100.100.3:8443 | x86_64       | ONLINE |
+------------+---------------------------+--------------+--------+
| byggmester | https://10.100.100.2:8443 | x86_64       | ONLINE |
+------------+---------------------------+--------------+--------+

If I want to spin up a Valkey container I can just launch it from docker directly.

λ ~ » incus remote add docker https://docker.io/ --protocol=oci --public
λ ~ » incus launch docker:valkey/valkey valkey
Launching valkey
λ ~ » incus list valkey -cns4tL
+--------+---------+----------------------+-----------------+------------+
| NAME   | STATE   | IPV4                 | TYPE            | LOCATION   |
+--------+---------+----------------------+-----------------+------------+
| valkey | RUNNING | 192.168.1.148 (eth0) | CONTAINER (APP) | byggmester |
+--------+---------+----------------------+-----------------+------------+

For various reasons (I don’t know networking very well), my entire network is flat. On both amd and byggmester I have a bridge device called br0, and all containers just use this bridged network as their NIC. This means each container gets a local IP, and my router lets me resolve domains for them.

λ ~ » ping valkey.local
PING valkey.local (192.168.1.148) 56(84) bytes of data.
64 bytes from valkey.local (192.168.1.148): icmp_seq=1 ttl=64 time=0.613 ms
64 bytes from valkey.local (192.168.1.148): icmp_seq=2 ttl=64 time=0.328 ms
64 bytes from valkey.local (192.168.1.148): icmp_seq=3 ttl=64 time=0.500 ms

Both hosts use systemd-networkd, and the configuration files look like this:

# /etc/systemd/network/20-wired.network
[Match]
Name=en*

[Network]
Bridge=br0

# /etc/systemd/network/50-br0.netdev
[NetDev]
Name=br0
Kind=bridge

# /etc/systemd/network/50-br0.network
[Match]
Name=br0

[Network]
Address=192.168.1.2/24
Gateway=192.168.1.1
DNS=192.168.1.1

The default profile in Incus just points at this device.

# λ ~ » incus profile show default
description: Default Incus profile
devices:
  eth0:
    nictype: bridged
    parent: br0
    type: nic
  root:
    path: /
    pool: default
    type: disk
name: default
used_by:
- /1.0/instances/valkey
project: default

In the future, when I do a bit more network segmentation, incus is probably going to get its own VLAN. But for a nice and simple setup I think this works just fine.

This works out quite nicely, however I don’t want to remember a bunch of commands for Incus, so clearly we want something a bit more declarative.

Opentofu

Incus has a nice provider for opentofu. It allows us to define our incus resources declaratively and treat everything as ephemeral with all persistent data stored on my NAS.

My terraform files for incus can be found here: https://github.com/Foxboron/ansible/tree/master/terraform/incus

All my services are separated into projects with a common template for permissions, network and default profiles. This keeps everything consistent across the different things I deploy.

It also makes things more compartmentalized when messing around with new infrastructure.

This is all managed by a single opentofu module called project, which all my other opentofu modules are derived from.

https://github.com/Foxboron/ansible/blob/master/terraform/incus/modules/project/main.tf

As a result I’ve accumulated a couple of projects!

λ ~ » incus project list -c ndu
+-------------------+------------------------------------------+---------+
| NAME              | DESCRIPTION                              | USED BY |
+-------------------+------------------------------------------+---------+
| ca                | ca project                               | 4       |
+-------------------+------------------------------------------+---------+
| default (current) | Default Incus project                    | 48      |
+-------------------+------------------------------------------+---------+
| dns               | dns project                              | 7       |
+-------------------+------------------------------------------+---------+
| immich            | immich project                           | 14      |
+-------------------+------------------------------------------+---------+
| mediaserver       | Mediaserver project                      | 18      |
+-------------------+------------------------------------------+---------+
| miniflux          | miniflux project                         | 6       |
+-------------------+------------------------------------------+---------+
| syncthing         | syncthing project                        | 3       |
+-------------------+------------------------------------------+---------+
| test              |                                          | 2       |
+-------------------+------------------------------------------+---------+
| user-1000         | User restricted project for "fox" (1000) | 2       |
+-------------------+------------------------------------------+---------+

I don’t think all of this is interesting, but I thought it would be neat to show off the Immich opentofu setup I wound up with.

Terraform code: https://github.com/Foxboron/ansible/tree/master/terraform/incus/modules/immich

λ ~ » incus list -c ntL
+-------------------------+-----------------+----------+
| NAME                    | TYPE            | LOCATION |
+-------------------------+-----------------+----------+
| auto-album-jpg          | CONTAINER (APP) | amd      |
+-------------------------+-----------------+----------+
| auto-album-raw          | CONTAINER (APP) | amd      |
+-------------------------+-----------------+----------+
| database                | CONTAINER (APP) | amd      |
+-------------------------+-----------------+----------+
| immich                  | CONTAINER (APP) | amd      |
+-------------------------+-----------------+----------+
| immich-machine-learning | CONTAINER (APP) | amd      |
+-------------------------+-----------------+----------+
| redis                   | CONTAINER (APP) | amd      |
+-------------------------+-----------------+----------+

As incus supports docker containers, it’s trivial to translate upstream docker-compose files to opentofu files that can be deployed. This is great, and it means I don’t have to think about how to deploy things when upstream projects often just provide a docker setup anyway.

The setup for the valkey container that we effectively launched earlier looks like this:

resource "incus_image" "redis" {
  # This references the main project name, "immich" in this case
  project = incus_project.immich.name

  alias {
    name = "redis"
  }

  source_image = {
    remote = "docker"
    name   = "valkey/valkey:8-bookworm"
  }
}

resource "incus_instance" "immich_redis" {
  name    = "redis"
  image   = incus_image.redis.fingerprint
  project = incus_project.immich.name
  target  = "amd"

  config = {
    "boot.autorestart" = true
  }
}

Apart from the confusing name “redis”, it’s all fairly straightforward. We define the image, and then define an instance. The rest is taken care of by the default incus profile, which includes a network setup and options for the root disk.
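For comparison, the same instance could be created imperatively. The sketch below is an assumption about the rough CLI equivalent of the two resources, not something taken from the linked repository. It relies on the docker remote added earlier, and the function name launch_redis is made up for illustration. Unlike the opentofu version, it does not register a separate image alias; the image is pulled implicitly on launch.

```shell
# Hypothetical helper: launch the Valkey image as an instance named "redis".
# $1 = incus project (e.g. immich), $2 = cluster member to place it on (e.g. amd)
launch_redis() {
    project=$1
    target=$2
    incus launch docker:valkey/valkey:8-bookworm redis \
        --project "$project" \
        --target "$target" \
        --config boot.autorestart=true
}

# Usage: launch_redis immich amd
```

The difference is mostly in bookkeeping: the command has to be remembered and re-run by hand, while the opentofu resources record the desired state and can be re-applied.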

Now that we have an immich instance running locally, we might want to expose it. Hosting all of this on a local network is cool, but we actually want to have some of this exposed to the internet as well.

Network and exposing things

The main goal of this is to self-host internet-facing services as well as internal ones. I have tried a bunch of things, including some less clever ideas! At one point I ended up bridging my local network to the hackerspace.

Turns out liberating my DHCP leases to the server racks in the basement of our hackerspace is a less clever idea.

However Matthew Garrett ended up posting about doing a wireguard point-to-site VPN where you forward all traffic that hits the box into your local network.

Locally hosting an internet-connected server

When you want to expose services to the internet from your local network, you end up having to deal with shitty ISPs that NAT all the traffic and have floating IPs that change. This makes for tedious work!

By hosting a reverse tunnel from a small VPS that has a static IPv4 and IPv6 address, you get a more reliable connection and can pretend the IPs are yours.

What I have currently done is provision a small VPS at my local hackerspace and give it an IPv4 and IPv6 address. I have then set up a wireguard tunnel to my byggmester node as mjg describes in his blog post.
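A minimal sketch of what the home side of such a tunnel could look like, written as a small shell function that renders a wg-quick config. This is not the actual configuration from this setup or from mjg's post: the tunnel address 10.200.0.2, the endpoint and the function name render_home_wg_conf are all placeholders, and the forwarding and policy routing rules on both ends are left out entirely.

```shell
# Hypothetical helper: print a wg-quick config for the node behind NAT.
render_home_wg_conf() {
    private_key=$1   # private key of the home node (from `wg genkey`)
    vps_pubkey=$2    # public key of the VPS
    vps_endpoint=$3  # host:port the VPS listens on
    cat <<EOF
[Interface]
PrivateKey = $private_key
Address = 10.200.0.2/32
# Do not let wg-quick install routes; routing is set up separately.
Table = off

[Peer]
PublicKey = $vps_pubkey
Endpoint = $vps_endpoint
# Forwarded traffic arrives with arbitrary source addresses.
AllowedIPs = 0.0.0.0/0, ::/0
# The home side is behind NAT, so it has to keep the tunnel open itself.
PersistentKeepalive = 25
EOF
}

# Usage (placeholder values):
#   render_home_wg_conf "$(wg genkey)" "<vps public key>" vps.example.org:51820
```

The important part for a NATed connection is that the home node dials out and sends keepalives, so the VPS never needs to reach the home network on its own.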

On byggmester I host nginx, which just reverse proxies the things I want exposed to the internet into my incus servers. I try to resolve things through my internal DNS server so I don’t have to hard-code IPs in my nginx configuration.

With nginx-acme I can also largely ignore certbot for TLS certificates and let nginx deal with this itself. This makes for fairly simple reverse proxying for services I want to expose externally.

Some snippets from my nginx setup:

# /etc/nginx/nginx.d/10-acme.conf
acme_issuer letsencrypt {
    uri https://acme-v02.api.letsencrypt.org/directory;
    contact root@linderud.dev;
    state_path /var/lib/nginx/acme;
    accept_terms_of_service;
}

acme_shared_zone zone=ngx_acme_shared:1M;

server {
    listen 80;
    location / {
        return 404;
    }
}

# /etc/nginx/snippets/acme.conf
acme_certificate letsencrypt;
ssl_certificate $acme_certificate;
ssl_certificate_key $acme_certificate_key;
ssl_certificate_cache max=2;

# /etc/nginx/nginx.d/30-bilder.linderud.dev.conf
server {
    listen 443 ssl;
    listen [::]:443 ssl;
    server_name bilder.linderud.dev;

    access_log /var/log/nginx/bilder.linderud.dev/access.log;
    error_log /var/log/nginx/bilder.linderud.dev/error.log;

    include snippets/acme.conf;
    include snippets/sslsettings.conf;

    client_max_body_size 50000M;
    resolver 192.168.1.1 ipv6=off;

    location / {
        set $immich immich.local;
        proxy_pass http://$immich:2283;
        proxy_set_header Host $http_host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";
        proxy_redirect off;
    }
}

This just leaves my static files!

Websites over syncthing?

I have a couple of static websites, like this blog. For years I’ve pushed it straight to git and then run an ansible role that would build my page and push it. For years I contemplated just moving this entire thing to Github pages, but with the current direction of Github it does not spark joy.

And like, having an entire CI setup for a couple of static files seems like overkill. Clearly we can do worse^wbetter!

I think I was exposed to this idea by someone in Arch Linux years ago, but I have a single user system. Root is my oyster and I can do whatever I want with any directory on my system. I have /srv! According to somewhere it should “store general server payload”. Okay.

Let’s mkdir /srv/linderud.dev and serve it over syncthing to my webserver!

λ /srv » tree -L2
.
└── linderud.dev
    ├── blog       <- this blog
    ├── coredns01  <- my dns server configs (long story)
    └── pub        <- my public/shared files

Serving this blog now just becomes a matter of building hugo with an output directory!

$ hugo build -d /srv/linderud.dev/blog/

Done! It works. Published my webpage. No service dependency. No CI/CD setup. No Github action runners. No ansible playbooks!

I don’t think this is a particularly novel idea. But I like the simplicity of this compared to other options, and it makes my computers and websites feel like a proper network instead of convoluted build steps I have to remember.
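For anyone who wants a small guard rail around that build step, here is a minimal sketch. It assumes it is run from the Hugo site directory and that the synced tree already exists; the function name publish_blog is made up for illustration, and the default destination is the path used above.

```shell
# Hypothetical wrapper: build the site straight into the synced directory,
# but refuse to run if the parent of the destination is missing (for example
# because the synced /srv tree has not been created on this machine yet).
publish_blog() {
    dest=${1:-/srv/linderud.dev/blog/}
    parent=$(dirname "$dest")
    if [ ! -d "$parent" ]; then
        echo "refusing to build: $parent does not exist" >&2
        return 1
    fi
    hugo build -d "$dest"
}

# Usage: publish_blog
#        publish_blog /srv/linderud.dev/blog/
```

The check only catches a missing directory; whether syncthing has actually picked up the change is still something to verify on the webserver side.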

OpenWRT One

Last but not least, a small note on my network.

OpenWRT One is an OpenWRT developer-focused router. I bought it from AliExpress and wrote an opentofu provider for it.

Honestly, I don’t understand a lot of networking, so the setup is straightforward and everything is just flat. The terraform setup can be found on my github.

I have some plans to get an openwrt-supported switch and maybe do some vlans at some point, but I don’t think there are a lot of interesting things to say about the setup beyond that.

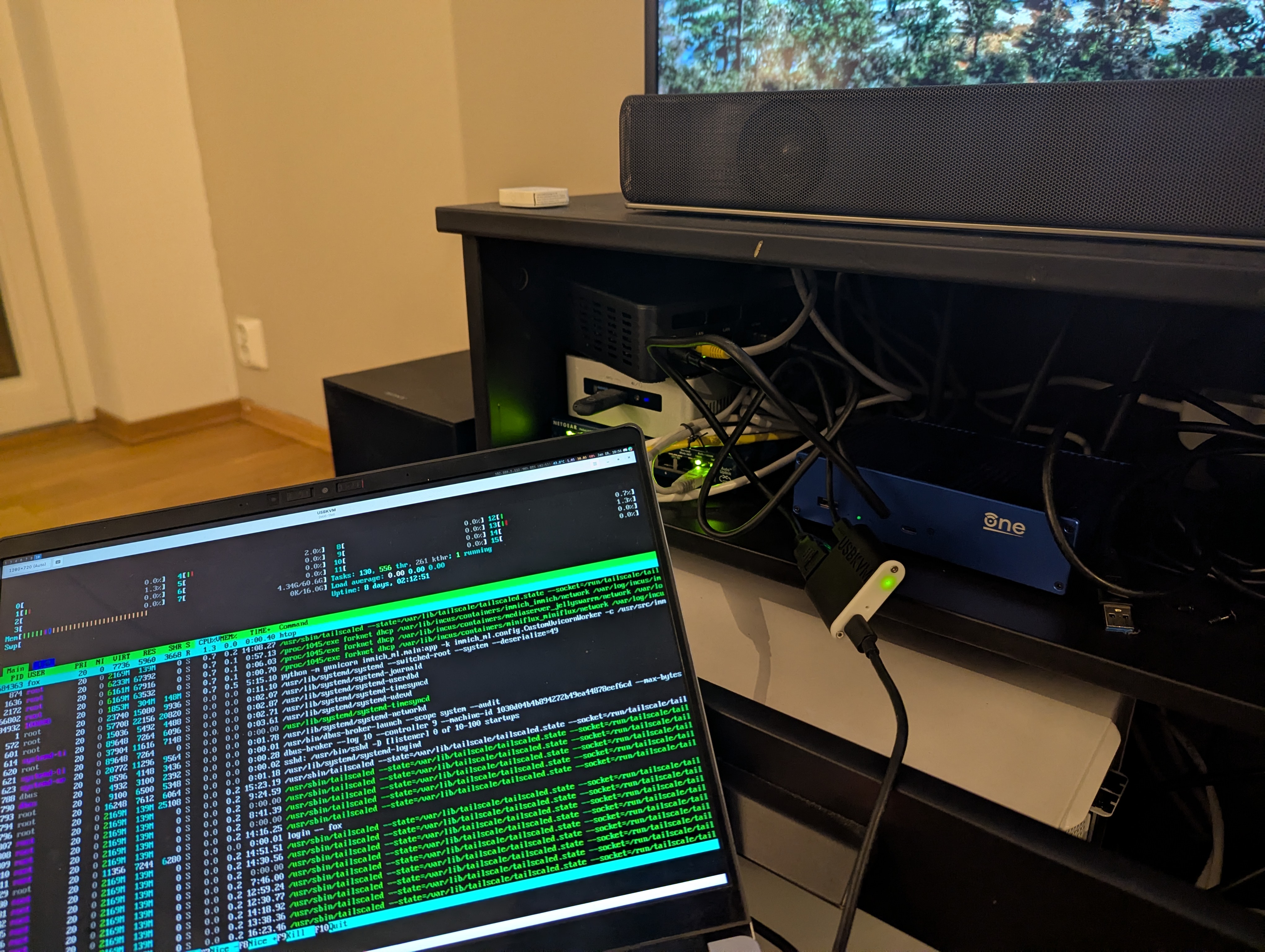

usbkvm

For dealing with a bunch of small computers I have had to lug around a screen and connect to them. During the 38th Chaos Communication Congress I came across a hackerspace selling small usbkvm devices for like 20 euros. I bought two! They have been amazing.

I just need an HDMI cable and a USB-C cable and I can connect to the computer I need to set up. Super simple, small and neat to work with. Makes dealing with home infrastructure a lot simpler.

Conclusion

This is a quick rundown of how I’m currently fixing and setting up my personal infrastructure. It was not intended to be a comprehensive guide, but it might give a bit of inspiration to people who have recently started looking at self-hosting more stuff from home. I’ll strive to keep my personal infra as open as possible, as I think there is a lot to learn from just reading other people’s code on these things.